This blog post covers common embedded systems interview questions and answers.

1. What is an Embedded System?

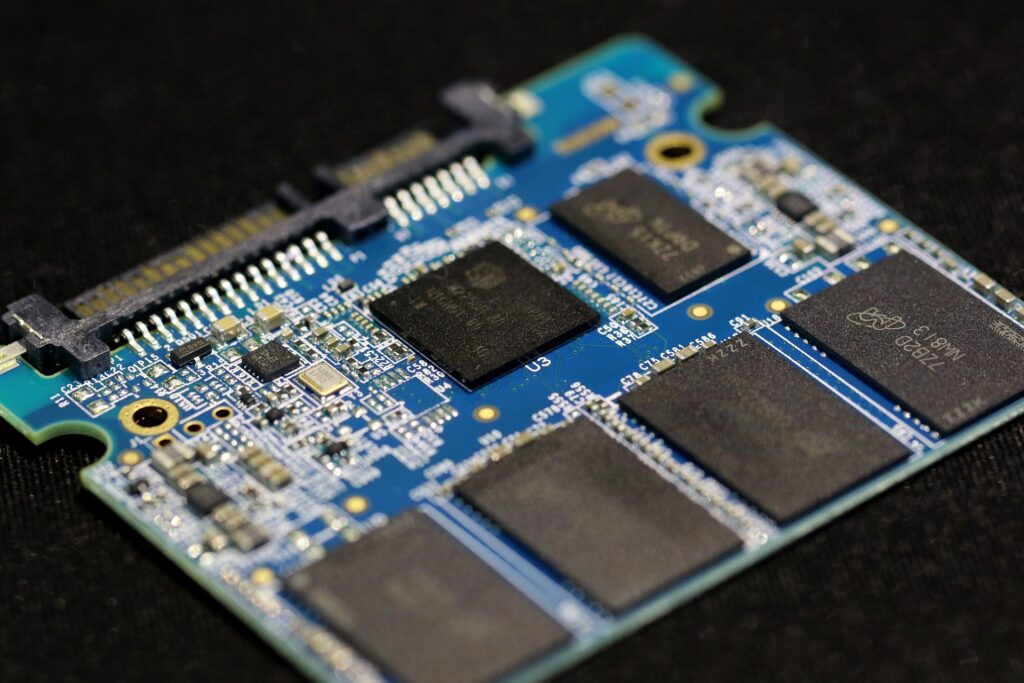

An embedded system is a combination of hardware and software designed to perform a specific task or set of tasks, often under real-time constraints, as part of a larger system or product. Embedded systems can be found in a wide range of products, such as consumer electronics, automobiles, medical devices, industrial control systems, and more. They are often low-power, have limited resources such as memory, processing power, and input/output capabilities, and are designed to operate with minimal human intervention.

2. What are the components of an embedded system?

The components of an embedded system may vary depending on the specific application and requirements, but typically include:

- Microcontroller/microprocessor: The main computing unit that performs the processing and control functions.

- Memory: Program memory to store the program code, data memory to store the data used by the program, and possibly other types of memory, such as EEPROM or flash memory for storing configuration information or firmware updates.

- Input/output (I/O) interfaces: These provide the means for the embedded system to communicate with the outside world. This may include digital and analog input/output, communication interfaces like UART, SPI, I2C, Ethernet, USB, etc.

- Timers and counters: These are used to measure time or count events and can be used for tasks like generating periodic interrupts, producing PWM outputs, and setting the frequency of a generated signal.

- Analog-to-digital converters (ADCs): These convert analog signals to digital data that can be processed by the microcontroller.

- Digital-to-analog converters (DACs): These convert digital data to analog signals that can be used to control analog systems.

- Watchdog timers: These are used to reset the system in case of a software or hardware failure.

- Real-time clock (RTC): This is a clock that keeps track of time even when the system is powered off and is used for time-stamping events or scheduling tasks.

- Power supply: This provides the power needed to operate the system and may include a battery, a power converter, or other power management components.

- Peripherals: These may include devices like displays, sensors, motors, actuators, and other components that are used to interface with the system and perform specific tasks.

Overall, these components work together to provide the functionality needed by the embedded system, with the microcontroller/microprocessor serving as the brain of the system and the other components providing the means for it to interact with the world around it.

3. What is a Microprocessor?

A microprocessor is a central processing unit (CPU) that is designed to be used in a small, integrated circuit package. It contains the arithmetic logic unit (ALU), registers, and control unit that are needed to perform basic arithmetic and logical operations, as well as the control and sequencing of instructions. Microprocessors are used in a wide variety of electronic devices, from personal computers to smartphones, to control systems, and more.

The first commercial microprocessor, the Intel 4004, was introduced in 1971. It had a maximum clock speed of 740 kHz and could execute roughly 92,000 instructions per second. Since then, microprocessors have become significantly more powerful and efficient, with clock speeds reaching several GHz and throughput of billions of instructions per second. They are the heart of most modern computing and control systems, and are critical components in many embedded systems.

4. What is a Microcontroller?

A microcontroller is a compact integrated circuit that combines a processor core with memory and peripherals. It is designed for use in embedded systems, where it provides the computing power and control functions needed to perform specific tasks. Unlike general-purpose microprocessors, which are used in a wide range of applications, microcontrollers are designed for specific applications and are optimized for low power consumption, small size, and low cost.

A typical microcontroller includes a CPU, memory (both program memory and data memory), input/output (I/O) interfaces, timers and counters, and other peripherals, such as analog-to-digital converters (ADCs) and digital-to-analog converters (DACs). These components are all integrated onto a single chip, which reduces the number of external components, the board space, and the cost of the design.

Microcontrollers are widely used in a variety of applications, including automotive systems, industrial control systems, medical devices, consumer electronics, and more. Because they are optimized for specific tasks and have low power consumption, they are ideal for use in battery-powered devices or other systems where power efficiency is important.

5. What is the difference between a microcontroller and a microprocessor?

The main difference between a microcontroller and a microprocessor is that a microcontroller is a complete computing system on a single chip, while a microprocessor is just the central processing unit (CPU) of a computer system.

Microcontrollers typically have built-in memory (both program memory and data memory), input/output (I/O) interfaces, timers and counters, and other peripherals, such as ADCs and DACs, all integrated onto a single chip. This makes them ideal for use in embedded systems, where space, power consumption, and cost are important considerations.

In contrast, microprocessors require additional components to be added to the system to perform tasks beyond basic computing functions. These may include memory chips, I/O controllers, and other peripherals. Microprocessors are typically used in general-purpose computing applications, such as desktop and laptop computers, where the system requirements are more complex and varied.

Overall, microcontrollers are designed for specific applications and are optimized for low power consumption, small size, and low cost, while microprocessors are designed for more general-purpose computing tasks and require additional components to function in a complete system.

6. What is the purpose of an interrupt in an embedded system?

In an embedded system, an interrupt is a signal that indicates to the microcontroller or microprocessor that an event has occurred and needs immediate attention. The purpose of an interrupt is to allow the processor to temporarily suspend its current task and execute a separate interrupt service routine (ISR) to handle the event. Once the ISR is completed, the processor resumes its previous task.

Interrupts are important in embedded systems because they allow the processor to respond quickly to events, without having to constantly check for them in a loop. This saves processing time and makes the system more efficient. Interrupts can be triggered by a variety of events, including hardware events like input signals, timer events, or errors, and software events like exceptions or system calls.

Interrupts are commonly used in embedded systems for tasks such as:

- Handling real-time events: Interrupts can be used to handle time-critical events like input signals, sensor readings, or external interrupts.

- Managing system resources: Interrupts can be used to manage system resources like memory, I/O ports, and timers.

- Implementing multitasking: Interrupts can be used to implement multitasking by allowing the processor to switch between tasks based on priority or other criteria.

- Improving power management: Interrupts can be used to implement power-saving techniques like sleep mode or power-down mode, where the processor can be put into a low-power state until an interrupt occurs.

Overall, interrupts play a critical role in the efficient operation of embedded systems, allowing them to respond quickly and accurately to events and perform their tasks with maximum efficiency.

7. Explain the role of timers and counters in an embedded system.

Timers and counters are important components of an embedded system that are used for a variety of tasks, including measuring time, generating delays, generating pulse-width modulation (PWM) signals, and counting events.

In an embedded system, timers are typically used for measuring the duration of time intervals or generating delays. Timers can be programmed to generate interrupts at specific time intervals or can be used to trigger events after a specific amount of time has elapsed. They are commonly used for tasks such as controlling the frequency of a signal, timing the duration of a pulse, or measuring the time between events.

Counters, on the other hand, are used for counting the number of events that occur over a specific period of time. Counters can be used to measure the frequency of a signal, count the number of pulses in a train, or track the number of rotations of a motor or other device. Counters can also be used in combination with timers to generate PWM signals, which are used for controlling the speed of motors, dimming LEDs, or controlling other devices that require variable output levels.

Both timers and counters are typically implemented as hardware components on microcontrollers or microprocessors, and are often integrated with other components, such as input/output (I/O) interfaces and interrupt controllers, to provide a complete system for controlling and monitoring the operation of the embedded system.

Overall, timers and counters play a critical role in the functionality of embedded systems, providing precise and accurate timing and counting capabilities that are essential for a wide range of applications.
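As a rough illustration of the timing and PWM arithmetic described above, here is a minimal sketch in C. The function names, the prescaler model, and the 16 MHz clock in the usage note are assumptions for illustration; a real timer peripheral has its own registers and limits, which come from the device's data sheet.

```c
#include <assert.h>
#include <stdint.h>

/* Number of timer ticks in one output period, given the timer input
   clock, a prescaler (clock divider), and the desired frequency.
   Hypothetical helper: real timers also have a maximum count. */
uint32_t timer_period_ticks(uint32_t clock_hz, uint32_t prescaler,
                            uint32_t freq_hz)
{
    assert(prescaler > 0 && freq_hz > 0);
    return (clock_hz / prescaler) / freq_hz;
}

/* Compare value that gives the requested PWM duty cycle (0..100 %).
   The output is assumed to be high while counter < compare value. */
uint32_t pwm_compare_value(uint32_t period_ticks, uint32_t duty_percent)
{
    assert(duty_percent <= 100);
    return (uint32_t)(((uint64_t)period_ticks * duty_percent) / 100u);
}
```

For example, with a 16 MHz clock and a prescaler of 16, a 1 kHz signal needs a period of 1000 ticks, and a 25 % duty cycle corresponds to a compare value of 250.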

8. What is the difference between analog and digital signals?

Analog and digital signals are two types of electrical signals used to represent information in electronic devices.

An analog signal is a continuous signal that varies in amplitude or frequency over time. It is represented by a continuous waveform that can take on any value within a specific range. Analog signals are used to represent physical quantities that vary continuously, such as sound, light, temperature, or pressure.

A digital signal, on the other hand, is a discrete signal that can only take on specific values. It is represented by a series of binary digits (bits) that can have only two states: 0 or 1. Digital signals are used to represent information in a computer or other digital device, and are typically processed using digital logic circuits.

The main difference between analog and digital signals is that analog signals are continuous and can take on any value within a specific range, while digital signals are discrete and can only take on specific values (typically 0 or 1). Analog signals are typically used for tasks that require the representation of continuous physical quantities, such as sound or temperature, while digital signals are used for tasks that involve the processing and storage of information in a computer or other digital device.

Another important difference between analog and digital signals is that analog signals are susceptible to noise and interference, which can cause distortion or degradation of the signal, while digital signals are less susceptible to noise and can be transmitted over long distances without significant degradation.

Overall, both analog and digital signals have their own unique advantages and disadvantages, and their suitability for a particular application depends on the specific requirements of the system.

9. What is the purpose of a watchdog timer?

A watchdog timer is a hardware timer that is used to monitor the operation of an embedded system and ensure that it is functioning properly. The purpose of a watchdog timer is to provide a failsafe mechanism that can detect and recover from software or hardware failures that may cause the system to hang or malfunction.

The watchdog timer is a counter that runs independently of the main program. If the system is functioning properly, the software periodically resets (often called "kicking" or "feeding") the counter before it reaches a predefined threshold. However, if the system hangs or becomes unresponsive, the counter will continue to count until it reaches the threshold, at which point the watchdog timer will generate a system reset signal, causing the system to reboot.

The watchdog timer is typically programmed to a timeout value that is longer than the normal processing time of the system, but shorter than the maximum allowable delay before the system is considered to have failed. This ensures that the watchdog timer can detect and recover from failures without causing unnecessary resets.

Overall, the purpose of a watchdog timer is to provide a mechanism for detecting and recovering from failures in an embedded system, and to ensure that the system is operating reliably and safely. By monitoring the operation of the system and providing a failsafe mechanism for detecting and recovering from failures, the watchdog timer can help to improve the robustness and reliability of embedded systems in a wide range of applications.
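The kick-or-reset behavior can be sketched with a small software simulation. This is not how a hardware watchdog is programmed (that is done through device-specific registers); the `sim_watchdog_t` type and the `wdt_*` functions are hypothetical names used only to show the logic.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Simulated watchdog: counts ticks since the last kick. */
typedef struct {
    uint32_t counter;      /* ticks since the last kick */
    uint32_t timeout;      /* threshold that triggers a reset */
    bool     reset_fired;  /* set once the threshold is reached */
} sim_watchdog_t;

void wdt_init(sim_watchdog_t *w, uint32_t timeout_ticks)
{
    assert(w != NULL && timeout_ticks > 0);
    w->counter = 0;
    w->timeout = timeout_ticks;
    w->reset_fired = false;
}

/* Called by healthy software to restart the countdown. */
void wdt_kick(sim_watchdog_t *w)
{
    w->counter = 0;
}

/* Called once per clock tick; fires the reset if nobody kicked in time. */
void wdt_tick(sim_watchdog_t *w)
{
    if (++w->counter >= w->timeout) {
        w->reset_fired = true;
    }
}
```

As long as `wdt_kick` is called more often than every `timeout` ticks, `reset_fired` stays false; if the main loop stalls, the flag is set, which on real hardware would correspond to a system reset.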

10. Explain the concept of polling versus interrupts.

Polling and interrupts are two different mechanisms used in embedded systems to communicate with external devices or sensors.

Polling is a mechanism where the processor repeatedly checks the status of an input device to determine if it has changed state. In polling, the processor periodically reads the status of an input device or sensor, and then takes appropriate action based on the status. Polling can be done in a busy-wait loop, where the processor continuously checks the status of the input device, or in a periodic interrupt, where the processor is interrupted at regular intervals to check the status of the device.

Interrupts, on the other hand, are a mechanism where the processor is interrupted by an external device or sensor when an event occurs. When an interrupt is triggered, the processor temporarily suspends its current task and handles the interrupt. Interrupts can be generated by hardware or software, and can be triggered by a variety of events, such as a change in the state of an input device, a timer timeout, or a software command.

The main difference between polling and interrupts is that with polling, the processor has to continuously check the status of the input device, which can consume a significant amount of processing time and may lead to delays or inefficiencies in the system. With interrupts, on the other hand, the processor is only interrupted when an event occurs, which can reduce processing overhead and improve system performance.

In general, interrupts are preferred over polling when the system needs to respond quickly to external events or when the processing time required for polling is significant. Polling may be preferred when the system has relatively slow external events or when the processing overhead required for interrupt handling is too high.

Overall, the choice between polling and interrupts depends on the specific requirements of the system, and both mechanisms can be used effectively in embedded systems depending on the application.
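The two approaches can be contrasted in a short sketch. Everything here is simulated: `status_reg` stands in for a memory-mapped status register, and `data_ready_isr` is a hypothetical handler that real hardware would call through the interrupt vector table.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical status "register"; on real hardware this would be a
   memory-mapped address taken from the device's data sheet. */
static volatile uint32_t status_reg = 0;
#define DATA_READY_BIT (1u << 0)

/* Flag shared between the ISR and the main loop; volatile so the
   compiler re-reads it on every access. */
static volatile bool data_ready_flag = false;

/* Polling: spin (up to max_spins times) until the ready bit is set. */
bool poll_for_data(uint32_t max_spins)
{
    assert(max_spins > 0);
    while (max_spins-- > 0) {
        if (status_reg & DATA_READY_BIT) {
            return true;
        }
    }
    return false;
}

/* Interrupt style: the ISR only records the event and returns quickly. */
void data_ready_isr(void)
{
    data_ready_flag = true;
}

/* Main-loop side: consume the event if the ISR has signalled one. */
bool consume_data_event(void)
{
    if (data_ready_flag) {
        data_ready_flag = false;
        return true;
    }
    return false;
}
```

In the polling version the CPU spends its time inside `poll_for_data`; in the interrupt version the main loop is free to do other work (or sleep) and only checks a flag that the ISR sets.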

11. What is a Real-Time Operating System (RTOS)?

A Real-Time Operating System (RTOS) is a type of operating system that is designed to handle time-critical applications and tasks with precise timing requirements. An RTOS is typically used in embedded systems and other real-time applications where the system must respond to events or signals within a specific time frame.

An RTOS provides a number of features that are designed to support real-time processing, including:

- Task scheduling: An RTOS provides a scheduler that decides which task runs on the CPU at any given time. With the priority-based preemptive scheduling that most RTOSes use, the highest-priority ready task always runs, and lower-priority tasks run only when no higher-priority task is ready.

- Interrupt handling: An RTOS provides mechanisms for handling interrupts and other events with minimal latency. Interrupts can be assigned different priorities, and the system can be configured to pre-empt lower-priority tasks to handle higher-priority interrupts.

- Memory management: An RTOS provides memory management capabilities that ensure that tasks do not interfere with each other or with the kernel. The memory management system can include features such as memory protection, memory allocation and deallocation, and memory mapping.

- Communication: An RTOS provides mechanisms for inter-task communication and synchronization. This can include features such as message passing, semaphores, and mutexes.

- Timer management: An RTOS provides mechanisms for managing system timers and scheduling time-critical tasks.

Overall, an RTOS is designed to provide a deterministic and predictable execution environment for real-time applications. It can help to ensure that time-critical tasks are executed within a specific time frame and that system resources are managed efficiently to support high-performance processing.
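The core decision of a priority-based scheduler can be sketched in a few lines. This is a simplified illustration, not the code of any particular RTOS; the `task_t` structure and the convention that a larger number means higher priority are assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    const char *name;
    uint8_t     priority;  /* assumption: larger number = higher priority */
    bool        ready;     /* true if the task is able to run now */
} task_t;

/* Returns the index of the highest-priority ready task, or -1 if no
   task is ready (a real RTOS would then run its idle task). */
int pick_next_task(const task_t *tasks, size_t count)
{
    assert(tasks != NULL);
    int best = -1;
    for (size_t i = 0; i < count; i++) {
        if (tasks[i].ready &&
            (best < 0 || tasks[i].priority > tasks[best].priority)) {
            best = (int)i;
        }
    }
    return best;
}
```

A real scheduler runs this kind of selection whenever a task becomes ready or blocks, and usually uses data structures that make the lookup constant-time rather than a linear scan.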

12. How do you debug an embedded system?

Debugging an embedded system can be challenging due to the limited resources and lack of user interface. Here are some common techniques that can be used to debug an embedded system:

- Debugging tools: Tools such as JTAG debuggers, logic analyzers, and oscilloscopes can be used to monitor the system behavior and trace the program execution.

- Code instrumentation: Instrumenting the code with debug statements or log messages can help in identifying the source of errors and bugs. This can be done by adding printf statements or debug macros to the code.

- Code profiling: Profiling tools can be used to analyze the performance of the system and identify performance bottlenecks. This can be done by measuring the execution time of each function or task and identifying the functions that take the most time to execute.

- Hardware breakpoints: Hardware breakpoints can be used to stop the processor when specific conditions are met. For example, a breakpoint can be set to stop the processor when a specific memory location is accessed or when a specific instruction is executed.

- Simulation and emulation: Simulation and emulation tools can be used to simulate the behavior of the system and test the code before it is deployed on the hardware. This can help in identifying and fixing errors before they occur in the actual system.

- Trace analysis: Trace analysis tools can be used to capture and analyze the system behavior during run-time. This can help in identifying the source of errors and bugs that are difficult to reproduce in a test environment.

Overall, debugging an embedded system requires a combination of techniques and tools that are specific to the system and the application. It is important to have a good understanding of the system architecture, the software design, and the hardware behavior to effectively debug an embedded system.
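As an example of code instrumentation, the following sketch shows a debug macro that can be compiled out for release builds. The names `DEBUG_ENABLED`, `DBG_PRINT`, and `clamp_sensor` are hypothetical, and writing to `stderr` is an assumption that suits a host build; on a target, the output would typically be routed to a UART or a debug probe.

```c
#include <assert.h>
#include <stdio.h>

#ifndef DEBUG_ENABLED
#define DEBUG_ENABLED 1   /* set to 0 to remove all debug output */
#endif

#if DEBUG_ENABLED
/* Prints file and line along with the message; needs at least one
   argument after the format string. */
#define DBG_PRINT(fmt, ...) \
    fprintf(stderr, "[%s:%d] " fmt "\n", __FILE__, __LINE__, __VA_ARGS__)
#else
#define DBG_PRINT(fmt, ...) ((void)0)
#endif

/* Example of instrumented code: clamp a raw reading to [lo, hi] and
   log whenever clamping actually happens. */
int clamp_sensor(int raw, int lo, int hi)
{
    assert(lo <= hi);
    if (raw < lo) {
        DBG_PRINT("raw=%d below %d, clamping", raw, lo);
        return lo;
    }
    if (raw > hi) {
        DBG_PRINT("raw=%d above %d, clamping", raw, hi);
        return hi;
    }
    return raw;
}
```

Because the macro expands to nothing when `DEBUG_ENABLED` is 0, the instrumentation costs no code space or execution time in a release build.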

13. What is the role of firmware in an embedded system?

Firmware is a type of software that is typically used to control the hardware in an embedded system. It is software that is stored on a non-volatile memory, such as a ROM, EEPROM, or flash memory, and is executed by the processor to control the system behavior.

The role of firmware in an embedded system can include the following:

- Initializing the hardware: Firmware is responsible for initializing the hardware components, such as the microcontroller, timers, and peripherals, during system startup.

- Controlling system behavior: Firmware is used to control the system behavior and implement the required functionality. This can include tasks such as reading inputs, processing data, and generating outputs.

- Managing power consumption: Firmware can be used to implement power management features, such as sleep modes, to reduce power consumption and extend battery life.

- Managing system updates: Firmware can be used to manage system updates and upgrades by providing mechanisms for updating the firmware and managing the versioning and compatibility of the firmware.

- Providing security: Firmware can be used to provide security features, such as encryption and authentication, to protect the system from unauthorized access and attacks.

Overall, firmware plays a critical role in an embedded system by providing the low-level software that is required to control the hardware and implement the required functionality. It is important to have well-designed and robust firmware to ensure the reliable and efficient operation of the system.

14. What is an ADC (Analog-to-Digital Converter)?

An Analog-to-Digital Converter (ADC) is an electronic circuit that converts an analog signal, such as voltage or current, into a digital signal that can be processed by a digital system, such as a microcontroller or computer.

The ADC samples the input signal at regular intervals and quantizes the signal into discrete digital values. The digital values are represented by a binary number that corresponds to the analog value of the input signal.

ADCs are commonly used in embedded systems to convert analog signals from sensors, such as temperature sensors, pressure sensors, and light sensors, into digital values that can be processed by the microcontroller or computer. The accuracy and resolution of the ADC determine the quality of the digital signal and the accuracy of the measurements.

There are several types of ADCs, including successive approximation ADCs, sigma-delta ADCs, and flash ADCs, each with their own advantages and disadvantages. The choice of ADC depends on the specific requirements of the application, such as the input signal range, resolution, and speed.
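The relationship between a raw ADC reading and the input voltage can be sketched as follows. The function name is hypothetical, and the code assumes an ideal converter in which one step equals Vref / 2^N; real parts add offset and gain errors, and some data sheets scale by 2^N - 1 instead.

```c
#include <assert.h>
#include <stdint.h>

/* Convert a raw ADC count to millivolts for an ideal N-bit converter
   with reference voltage vref_mv (one LSB = vref_mv / 2^bits). */
uint32_t adc_to_millivolts(uint32_t raw, uint32_t vref_mv, unsigned bits)
{
    assert(bits > 0 && bits < 32);
    uint32_t steps = 1u << bits;          /* number of quantization levels */
    assert(raw < steps);
    return (uint32_t)(((uint64_t)raw * vref_mv) / steps);
}
```

For a 12-bit ADC with a 3300 mV reference, a mid-scale reading of 2048 corresponds to 1650 mV, and each step is roughly 0.8 mV.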

15. What is a DAC (Digital-to-Analog Converter)?

A Digital-to-Analog Converter (DAC) is an electronic circuit that converts a digital signal into an analog signal. It takes in a binary digital input and outputs a continuous analog voltage or current signal that can be used to control analog circuits, such as amplifiers, motors, or lights.

The DAC works by converting the digital signal into a series of analog voltage or current levels. The output signal is a stepwise approximation of the original analog signal, where the number of steps and the resolution of each step depend on the number of bits in the digital input.

DACs are commonly used in embedded systems to generate analog waveforms for audio and video applications, or to drive analog actuators and set reference voltages. The accuracy and resolution of the DAC determine the quality of the output signal and the fidelity of the analog control.

There are several types of DACs, including binary-weighted DACs, R-2R ladder DACs, and sigma-delta DACs, each with their own advantages and disadvantages. The choice of DAC depends on the specific requirements of the application, such as the output signal range, resolution, and speed.
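The dependence of the step size on the number of bits can be illustrated with a small helper that picks the digital code for a target voltage. The function name is hypothetical, and an ideal DAC with output = code × Vref / 2^N is assumed.

```c
#include <assert.h>
#include <stdint.h>

/* Digital code that makes an ideal N-bit DAC output approximately
   target_mv, clamped to the largest code the DAC accepts. */
uint32_t dac_code_for_millivolts(uint32_t target_mv, uint32_t vref_mv,
                                 unsigned bits)
{
    assert(bits > 0 && bits < 32 && vref_mv > 0);
    uint32_t max_code = (1u << bits) - 1u;
    uint64_t code = ((uint64_t)target_mv << bits) / vref_mv;
    return code > max_code ? max_code : (uint32_t)code;
}
```

With a 3300 mV reference, a 12-bit DAC needs code 2048 to output 1650 mV, while an 8-bit DAC with a 5000 mV reference needs code 128 for 2500 mV. Requests at or above the reference voltage are clamped to the maximum code.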

16. What are the advantages of using an RTOS in an embedded system?

There are several advantages of using a Real-Time Operating System (RTOS) in an embedded system, including:

- Deterministic behavior: An RTOS provides predictable scheduling with bounded latencies, which makes it possible to design tasks that complete within a specific time frame. This makes it suitable for real-time applications.

- Task scheduling: An RTOS can manage and schedule tasks, ensuring that they are executed in an optimized manner, and allowing multiple tasks to run concurrently.

- Resource management: An RTOS can manage system resources, such as memory and peripherals, ensuring that they are shared efficiently between tasks.

- Error handling: An RTOS can handle errors and exceptions, providing a mechanism for tasks to recover from errors and continue executing.

- Code reusability: An RTOS provides a standard framework for developing embedded applications, allowing code to be reused across multiple projects.

- Scalability: An RTOS can be scaled to meet the requirements of the application, allowing it to handle multiple tasks and more complex systems.

- Ease of development: An RTOS provides ready-made services such as scheduling, synchronization, and timing, reducing the complexity of the development process.

Overall, an RTOS can provide a robust and efficient framework for developing embedded applications, enabling developers to focus on the application logic rather than the low-level system details. It is particularly useful in real-time applications where timing and determinism are critical.

17. How do you optimize code for memory usage and speed in an embedded system?

Optimizing code for memory usage and speed in an embedded system involves several techniques, including:

- Use of efficient algorithms: Choose the most efficient algorithm that suits the requirements of the application, and optimize the code to reduce redundant operations.

- Minimize use of global variables: Global and static variables occupy RAM for the entire lifetime of the program, and heavy use of them can prevent the compiler from keeping values in registers. Use local variables or parameters where practical.

- Optimize data structures: Use data structures that minimize memory usage, such as arrays instead of linked lists, and avoid padding or alignment that wastes memory.

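- Use compiler optimization: Use compiler optimization levels such as -O2 or -O3 to optimize code for speed. Higher levels can increase code size through inlining and loop unrolling, so check the resulting binary size against the available program memory.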

- Use inline functions: Use inline functions for frequently used code to reduce function call overhead.

- Minimize code size: Remove unused code and functions, use conditional compilation to exclude code that is not needed for the specific configuration, and optimize the code for size using compiler flags such as -Os.

- Use hardware peripherals efficiently: Use hardware peripherals such as DMA and interrupts to offload processing from the CPU, reducing the execution time and freeing up the CPU for other tasks.

- Profile and optimize: Use profiling tools to identify performance bottlenecks in the code, and optimize those sections to improve overall performance.

Optimizing code for memory usage and speed in an embedded system requires a balance between performance, memory usage, and code complexity. The specific techniques used will depend on the requirements of the application and the capabilities of the hardware.
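The point about padding can be made concrete with two versions of the same structure. The type names are hypothetical, and the exact sizes depend on the target's alignment rules; on many 32-bit targets the first layout takes 12 bytes and the second 8.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Small members on either side of a 32-bit member: the compiler
   typically inserts padding after 'flag' and after 'mode'. */
typedef struct {
    uint8_t  flag;
    uint32_t count;
    uint8_t  mode;
} loose_t;

/* Same members, largest first: the two bytes share one padded slot. */
typedef struct {
    uint32_t count;
    uint8_t  flag;
    uint8_t  mode;
} tight_t;

/* Bytes saved per instance by reordering (0 if the target does not
   pad at all). */
size_t bytes_saved_by_reordering(void)
{
    assert(sizeof(tight_t) <= sizeof(loose_t));
    return sizeof(loose_t) - sizeof(tight_t);
}
```

The saving per instance is small, but it adds up quickly when the structure is stored in a large array in RAM.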

18. What is the difference between a UART and an SPI interface?

UART (Universal Asynchronous Receiver/Transmitter) and SPI (Serial Peripheral Interface) are two common serial communication interfaces used in embedded systems. The main differences between UART and SPI are:

- Communication Method: UART is an asynchronous communication protocol, which means that data is transmitted one byte at a time with start and stop bits. SPI, on the other hand, is a synchronous communication protocol, which means that data is transmitted in a continuous stream with a clock signal to synchronize the data transmission.

- Number of wires: UART requires two signal wires for bidirectional communication, a transmit (TX) wire and a receive (RX) wire, plus a common ground. SPI typically requires four signal wires: a clock wire, a chip select wire, a MOSI (Master Output Slave Input) wire, and a MISO (Master Input Slave Output) wire, with one additional chip select wire for each extra slave device.

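- Data Rate: SPI typically supports faster data rates than UART. Because the master supplies a dedicated clock, the receiver does not need to recover timing from the data or add start and stop bits to every byte, so SPI buses commonly run at several MHz or more, while UART links commonly run at baud rates from a few kbit/s up to a few Mbit/s.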

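- Distance: SPI is intended for short-distance communication, typically between chips on the same board. A UART on its own is also a short-distance interface, but combined with a line transceiver such as RS-232 or RS-485 it can be used over much longer cables.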

- Bus topology: SPI is normally used with a single master and one or more slaves, each selected by its own chip select line. UART is a point-to-point link between two devices and has no concept of master and slave.

In summary, UART is a simple, low-cost, and commonly used interface for asynchronous communication, while SPI is a faster, synchronous interface that is useful for communicating with devices that require higher data rates and more complex communication. The choice between UART and SPI will depend on the specific requirements of the application, including factors such as data rate, distance, and complexity.
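The start and stop bits mentioned above can be shown by building a UART frame by hand. This sketch assumes the common 8N1 format (8 data bits, no parity, 1 stop bit); the function names are hypothetical, and a real UART peripheral does this framing in hardware.

```c
#include <assert.h>
#include <stdint.h>

/* Build a 10-bit 8N1 frame. Bit 0 of the result is the first bit on
   the wire: start bit (0), 8 data bits LSB first, stop bit (1). */
uint16_t uart_frame_8n1(uint8_t data)
{
    uint16_t frame = 0;              /* bit 0: start bit, always 0 */
    frame |= (uint16_t)(data << 1);  /* bits 1..8: data, LSB first */
    frame |= (uint16_t)(1u << 9);    /* bit 9: stop bit, always 1 */
    return frame;
}

/* Time on the wire, in microseconds, for n_bytes at a given baud
   rate, counting 10 bits per byte because of the framing overhead. */
uint32_t uart_tx_time_us(uint32_t n_bytes, uint32_t baud)
{
    assert(baud > 0);
    return (uint32_t)(((uint64_t)n_bytes * 10u * 1000000u) / baud);
}
```

The framing means only 8 of every 10 transmitted bits carry data; at 9600 baud, for example, sending 100 bytes takes a little over 104 ms.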

19. How do you implement communication between two embedded systems?

There are several ways to implement communication between two embedded systems, depending on the specific requirements of the application. Here are some common methods:

- UART: UART is a simple and commonly used communication interface for point-to-point communication between embedded systems. The TX pin of each system is connected to the RX pin of the other, together with a common ground, so both systems can send and receive serial data at the same time. A single data wire is enough if data only needs to flow in one direction.

- SPI: SPI is a synchronous communication interface that is commonly used for communication between embedded systems that require high-speed data transfer. SPI requires four wires for communication: a clock wire, a chip select wire, a MOSI (Master Output Slave Input) wire, and a MISO (Master Input Slave Output) wire.

- I2C: I2C (Inter-Integrated Circuit) is a relatively low-speed, two-wire communication interface (commonly 100 kbit/s or 400 kbit/s) that is commonly used for communication between embedded systems. I2C uses a master-slave architecture in which each slave has its own address, with the master initiating communication and the addressed slave responding to requests.

- CAN: CAN (Controller Area Network) is a robust, differential communication protocol commonly used in automotive and industrial applications for communication between embedded systems. CAN supports a multimaster environment in which many nodes share the same bus; the practical number of nodes is limited by the transceivers and bus loading rather than by the protocol itself.

- Ethernet: Ethernet is a widely used communication protocol that enables communication over a network. It is commonly used in embedded systems that require communication over longer distances, such as industrial automation and IoT applications.

The choice of communication method will depend on the specific requirements of the application, including factors such as data rate, distance, power consumption, and cost.

20. What is DMA (Direct Memory Access)?

DMA (Direct Memory Access) is a method used in embedded systems to transfer data between peripherals and memory without the CPU having to move the data itself. In a typical data transfer without DMA, the CPU would have to read and write every piece of data, which can take up significant processing time and slow down the system. With DMA, the CPU only sets up the transfer, and the data is then moved directly between the peripheral and memory, freeing up the CPU to perform other tasks.

When a DMA transfer is initiated, the DMA controller takes control of the system bus and directly accesses memory and the peripheral, transferring data between the two without CPU intervention. This allows for faster data transfer rates and more efficient use of system resources.

DMA can be especially useful in applications where large amounts of data need to be transferred between peripherals and memory, such as video or audio processing. DMA can also help to reduce power consumption in embedded systems by allowing the CPU to enter low-power modes when data transfers are being handled by the DMA controller.

However, DMA requires careful configuration and management to ensure that it is properly synchronized with the rest of the system and does not cause conflicts or errors. DMA controllers are typically integrated into microcontrollers or SoCs (System-on-Chip), and their operation is controlled through software drivers.

21. What are the differences between a CAN and a LIN bus?

CAN (Controller Area Network) and LIN (Local Interconnect Network) are two communication protocols commonly used in automotive and industrial applications.

CAN is a high-speed, differential bus that supports data rates up to 1 Mbit/s (classical CAN) and is used for real-time, peer-to-peer communication between multiple nodes on a network. CAN is a multimaster bus, meaning that multiple nodes can transmit messages on the bus, and messages are prioritized based on their identifier. CAN provides strong error detection mechanisms, such as a CRC, bit stuffing, and acknowledgement checks, and automatically retransmits corrupted frames, ensuring reliable data transmission over a noisy bus.

LIN, on the other hand, is a low-speed, single-wire bus that supports data rates up to 20 kbit/s and is typically used for control and monitoring applications in automotive systems. LIN is a single-master bus: the master starts every frame by sending a header according to a pre-defined schedule, and one node (the master itself or a slave) answers with the data. LIN offers only basic error detection, in the form of identifier parity bits and a checksum, and leaves error handling largely to the application layer.

The differences between CAN and LIN make them suitable for different types of applications. CAN is typically used in applications that require fast, reliable real-time communication, such as engine control and other powertrain systems, while LIN is used in applications that require simple and low-cost communication, such as body electronics (for example window lifts, mirrors, and seat controls).

In summary, the main differences between CAN and LIN are:

- CAN is a high-speed, differential bus, while LIN is a low-speed, single-wire bus.

- CAN supports data rates up to 1 Mbit/s, while LIN supports data rates up to 20 kbit/s.

- CAN is a multimaster bus, while LIN is a single-master bus.

- CAN provides robust error detection with automatic retransmission, while LIN offers only basic checks (identifier parity and a checksum).
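How CAN prioritizes messages by identifier can be sketched with a simulation of bitwise arbitration. On a CAN bus a dominant bit (0) overrides a recessive bit (1), and identifiers are sent most significant bit first, so the lowest identifier wins. The function below is a hypothetical illustration for 11-bit identifiers and assumes that all contending identifiers are unique.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simulate arbitration between nodes that start transmitting at the
   same time. Returns the index of the winning node. */
int can_arbitrate(const uint16_t *ids, size_t count)
{
    assert(ids != NULL && count > 0 && count < 32);
    uint32_t contending = (1u << count) - 1u;  /* one bit per node */

    for (int bit = 10; bit >= 0; bit--) {      /* MSB is sent first */
        /* Bus level is the AND of all transmitted bits (0 dominates). */
        unsigned bus = 1u;
        for (size_t i = 0; i < count; i++) {
            if ((contending >> i) & 1u) {
                bus &= (unsigned)(ids[i] >> bit) & 1u;
            }
        }
        /* A node that sent recessive but reads dominant backs off. */
        for (size_t i = 0; i < count; i++) {
            if (((contending >> i) & 1u) &&
                (((unsigned)(ids[i] >> bit) & 1u) != bus)) {
                contending &= ~(1u << i);
            }
        }
    }
    for (size_t i = 0; i < count; i++) {
        if ((contending >> i) & 1u) {
            return (int)i;
        }
    }
    return -1;  /* not reached when count > 0 */
}
```

Because the losing nodes simply stop transmitting and retry later, the winning frame is not corrupted, which is why arbitration on CAN costs no bus time.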

22. How do you implement power management in an embedded system?

Implementing power management in an embedded system involves reducing power consumption while ensuring that the system is still able to meet its performance and functional requirements. Here are some general steps for implementing power management in an embedded system:

- Understand power requirements: Before implementing power management, it is important to understand the power requirements of the system, including the expected power consumption of each component and the power supply capacity.

- Identify power-saving opportunities: Identify components or subsystems in the system that can be optimized for power savings. For example, unused peripherals or sensors can be put into low-power sleep modes when not in use, and the CPU clock frequency can be dynamically adjusted based on system workload.

- Implement low-power modes: Most microcontrollers and SoCs provide low-power modes that allow components to be powered down or put into sleep mode. Implementing these modes can significantly reduce power consumption while maintaining functionality.

- Use power-efficient components: Select components, such as sensors, display screens, and wireless modules, that are designed to be power efficient. This can reduce overall power consumption and extend battery life.

- Optimize software: Optimize software to reduce CPU utilization and avoid unnecessary wakeups from low-power modes. For example, use efficient algorithms and prefer interrupt-driven designs over busy-wait polling so that the CPU can sleep between events.

- Test and validate power management: Test the power management implementation to ensure that it meets the power requirements of the system and does not compromise functionality or performance.

Overall, implementing power management in an embedded system requires a careful balance between power consumption and functionality. By following these steps and using power-efficient components and software optimizations, significant power savings can be achieved while maintaining system performance and functionality.
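To estimate how much low-power modes help, the average current of a device that wakes up periodically can be computed from its active and sleep currents. The function names and the example figures in the usage note are assumptions for illustration; real estimates should also account for wake-up time, regulator losses, and battery self-discharge.

```c
#include <assert.h>
#include <stdint.h>

/* Average current in microamps for a device that is active for
   active_ms out of every period_ms and asleep the rest of the time. */
uint32_t average_current_ua(uint32_t active_ua, uint32_t sleep_ua,
                            uint32_t active_ms, uint32_t period_ms)
{
    assert(period_ms > 0 && active_ms <= period_ms);
    uint64_t charge = (uint64_t)active_ua * active_ms +
                      (uint64_t)sleep_ua * (period_ms - active_ms);
    return (uint32_t)(charge / period_ms);
}

/* Idealized battery life in hours for a given capacity (mAh) and
   average current (uA). */
uint32_t battery_life_hours(uint32_t capacity_mah, uint32_t avg_ua)
{
    assert(avg_ua > 0);
    return (uint32_t)(((uint64_t)capacity_mah * 1000u) / avg_ua);
}
```

For instance, a device drawing 10 mA for 10 ms every second and 10 µA while asleep averages about 109 µA, which an ideal 220 mAh cell could supply for roughly 2000 hours; the same device left awake all the time would last only 22 hours.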
